| | Class | Alcohol | Malic Acid | Ash | Alcalinity of Ash | Magnesium | Total Phenols | Flavanoids | Nonflavanoid phenols | Proanthocyanins | Color Intensity | Hue | OD280/OD315 of diulted wines | Proline |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 14.23 | 1.71 | 2.43 | 15.6 | 127 | 2.80 | 3.06 | 0.28 | 2.29 | 5.64 | 1.04 | 3.92 | 1065 |
| 1 | 1 | 13.20 | 1.78 | 2.14 | 11.2 | 100 | 2.65 | 2.76 | 0.26 | 1.28 | 4.38 | 1.05 | 3.40 | 1050 |
| 2 | 1 | 13.16 | 2.36 | 2.67 | 18.6 | 101 | 2.80 | 3.24 | 0.30 | 2.81 | 5.68 | 1.03 | 3.17 | 1185 |
| 3 | 1 | 14.37 | 1.95 | 2.50 | 16.8 | 113 | 3.85 | 3.49 | 0.24 | 2.18 | 7.80 | 0.86 | 3.45 | 1480 |
| 4 | 1 | 13.24 | 2.59 | 2.87 | 21.0 | 118 | 2.80 | 2.69 | 0.39 | 1.82 | 4.32 | 1.04 | 2.93 | 735 |

5. Accuracy of Classifier

To see how well our classifier does, we might put 50% of the data into the training set and the other 50% into the test set. Basically, we are setting aside some data for later use, so we can use it to measure the accuracy of our classifier. We’ve been calling that the test set. Sometimes people will call the data that you set aside for testing a hold-out set, and they’ll call this strategy for estimating accuracy the hold-out method.

Note that this approach requires great discipline. Before you start applying machine learning methods, you have to take some of your data and set it aside for testing. You must avoid using the test set for developing your classifier: you shouldn’t use it to help train your classifier, to tweak its settings, or to brainstorm ways to improve it. Instead, you should use it only once, at the very end, after you’ve finalized your classifier, when you want an unbiased estimate of its accuracy.
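The chapter’s own split in 5.1 shuffles the table with `sample` and then cuts it by position. As a minimal sketch of the same hold-out idea packaged as a reusable function, here is a hypothetical helper, `holdout_split` (the name and the `seed` parameter are assumptions, not part of the original code). Passing a `seed` through to pandas’ `random_state` makes the split, and therefore the accuracy estimate, repeatable from run to run.

```python
import numpy as np
import pandas as pd

def holdout_split(df, test_fraction=0.5, seed=None):
    """Hypothetical helper: randomly permute the rows of df, keep the first
    part as the training set, and hold out the rest as the test set."""
    # frac=1 with replace=False returns every row exactly once, in random order
    shuffled = df.sample(frac=1, replace=False, random_state=seed)
    # Number of training rows, rounded to a whole number of rows
    n_train = int(round(len(df) * (1 - test_fraction)))
    training = shuffled.iloc[:n_train]
    test = shuffled.iloc[n_train:]
    return training, test

# A small made-up table, used only to demonstrate the split
demo = pd.DataFrame({'x': np.arange(10), 'Class': [0, 1] * 5})
train, test = holdout_split(demo, test_fraction=0.5, seed=0)
print(len(train), len(test))  # 5 5
```

With `test_fraction=0.5`, this gives an 89/89 split for the 178 wines and a 342/341 split for the 683 patients, the same sizes used in this chapter.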

5.1. Measuring the Accuracy of Our Wine Classifier

OK, so let’s apply the hold-out method to evaluate the effectiveness of the \(k\)-nearest neighbor classifier for identifying wines. The data set has 178 wines, so we’ll randomly permute the data set and put 89 of them in the training set and the remaining 89 in the test set.

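```python
# Randomly permute the rows (every row sampled once, without replacement)
shuffled_wine = wine.sample(len(wine), replace=False)
# The first 89 shuffled rows form the training set
training_set = shuffled_wine.take(np.arange(89))
training_set.head()
```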

| | Class | Alcohol | Malic Acid | Ash | Alcalinity of Ash | Magnesium | Total Phenols | Flavanoids | Nonflavanoid phenols | Proanthocyanins | Color Intensity | Hue | OD280/OD315 of diulted wines | Proline |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 1 | 13.16 | 2.36 | 2.67 | 18.6 | 101 | 2.80 | 3.24 | 0.30 | 2.81 | 5.68 | 1.03 | 3.17 | 1185 |
| 176 | 0 | 13.17 | 2.59 | 2.37 | 20.0 | 120 | 1.65 | 0.68 | 0.53 | 1.46 | 9.30 | 0.60 | 1.62 | 840 |
| 126 | 0 | 12.43 | 1.53 | 2.29 | 21.5 | 86 | 2.74 | 3.15 | 0.39 | 1.77 | 3.94 | 0.69 | 2.84 | 352 |
| 11 | 1 | 14.12 | 1.48 | 2.32 | 16.8 | 95 | 2.20 | 2.43 | 0.26 | 1.57 | 5.00 | 1.17 | 2.82 | 1280 |
| 39 | 1 | 14.22 | 3.99 | 2.51 | 13.2 | 128 | 3.00 | 3.04 | 0.20 | 2.08 | 5.10 | 0.89 | 3.53 | 760 |

```python
# The remaining 89 shuffled rows form the test set
test_set = shuffled_wine.take(np.arange(89, 178))
test_set.head()
```

| | Class | Alcohol | Malic Acid | Ash | Alcalinity of Ash | Magnesium | Total Phenols | Flavanoids | Nonflavanoid phenols | Proanthocyanins | Color Intensity | Hue | OD280/OD315 of diulted wines | Proline |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 29 | 1 | 14.02 | 1.68 | 2.21 | 16.0 | 96 | 2.65 | 2.33 | 0.26 | 1.98 | 4.70 | 1.04 | 3.59 | 1035 |
| 99 | 0 | 12.29 | 3.17 | 2.21 | 18.0 | 88 | 2.85 | 2.99 | 0.45 | 2.81 | 2.30 | 1.42 | 2.83 | 406 |
| 156 | 0 | 13.84 | 4.12 | 2.38 | 19.5 | 89 | 1.80 | 0.83 | 0.48 | 1.56 | 9.01 | 0.57 | 1.64 | 480 |
| 24 | 1 | 13.50 | 1.81 | 2.61 | 20.0 | 96 | 2.53 | 2.61 | 0.28 | 1.66 | 3.52 | 1.12 | 3.82 | 845 |
| 95 | 0 | 12.47 | 1.52 | 2.20 | 19.0 | 162 | 2.50 | 2.27 | 0.32 | 3.28 | 2.60 | 1.16 | 2.63 | 937 |

We’ll train the classifier using the 89 wines in the training set, and evaluate how well it performs on the test set. To make our lives easier, we’ll write a function to evaluate a classifier on every wine in the test set:

```python
import numpy as np

def count_zero(array):
    """Counts the number of 0's in an array"""
    return len(array) - np.count_nonzero(array)

def count_equal(array1, array2):
    """Takes two numerical arrays of equal length
    and counts the indices where the two are equal"""
    return count_zero(array1 - array2)

def evaluate_accuracy(training, test, k):
    """Fraction of rows in test whose predicted class matches the true Class"""
    test_attributes = test.drop(columns=['Class'])
    def classify_testrow(row):
        # classify(training, new_point, k) is the k-NN classifier from an earlier section
        return classify(training, row, k)
    c = test_attributes.apply(classify_testrow, axis=1)
    return count_equal(c, test['Class']) / len(test)
```
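`evaluate_accuracy` relies on the chapter’s `classify` function, which isn’t repeated in this section. To experiment with the evaluation loop on its own, here is a self-contained sketch with a hypothetical stand-in for `classify`: it assumes Euclidean distance over all non-`Class` columns and a simple majority vote (ties go to the smaller label), which may differ in details from the chapter’s version. It also swaps the `count_equal` subtraction trick for a direct `==` comparison.

```python
import numpy as np
import pandas as pd

def classify(training, new_point, k):
    """Hypothetical stand-in for the chapter's classify: majority vote among
    the k training rows closest to new_point (Euclidean distance).
    Assumes new_point lists its attributes in the same order as training."""
    attributes = training.drop(columns=['Class']).to_numpy(dtype=float)
    point = np.asarray(new_point, dtype=float)
    distances = np.sqrt(((attributes - point) ** 2).sum(axis=1))
    nearest = np.argsort(distances)[:k]          # positions of the k closest rows
    labels, votes = np.unique(training['Class'].to_numpy()[nearest], return_counts=True)
    return labels[np.argmax(votes)]              # ties go to the smaller label

def evaluate_accuracy(training, test, k):
    test_attributes = test.drop(columns=['Class'])
    predictions = test_attributes.apply(lambda row: classify(training, row, k), axis=1)
    # Fraction of test rows whose predicted class equals the true class
    return (predictions.to_numpy() == test['Class'].to_numpy()).mean()

# Made-up data: class 0 clusters near (0, 0), class 1 near (10, 10)
training = pd.DataFrame({'x': [0, 0, 1, 10, 10, 11], 'y': [0, 1, 0, 10, 11, 10],
                         'Class': [0, 0, 0, 1, 1, 1]})
test = pd.DataFrame({'x': [0.5, 10.5, 8], 'y': [0.5, 10.5, 1], 'Class': [0, 1, 1]})
print(evaluate_accuracy(training, test, 3))  # 0.6666666666666666
```

Comparing with `==` gives the same count as `count_equal` for numeric labels, and it keeps working if the class labels are strings such as `'benign'` and `'malignant'`, where subtraction would fail.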

Now for the grand reveal – let’s see how we did. We’ll arbitrarily use \(k=5\).

```python
evaluate_accuracy(training_set, test_set, 5)
```

0.8876404494382022

That’s about 89% accuracy, which isn’t bad at all for such a simple classifier.
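Because the test set holds 89 wines, that accuracy corresponds to a whole number of correct predictions:

\[
\frac{\text{number correct}}{89} = 0.8876\ldots
\quad\Longrightarrow\quad
\text{number correct} = 0.8876\ldots \times 89 = 79,
\]

so the classifier got 79 of the 89 test wines right and misclassified the other 10. Since the data set is shuffled at random without a fixed seed, rerunning the cells will generally produce a somewhat different split and accuracy.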

5.2. Breast Cancer Diagnosis

Now I want to do an example based on diagnosing breast cancer. I was inspired by Brittany Wenger, who won the Google national science fair in 2012 as a 17-year-old high school student. Here’s Brittany:

Brittany’s science fair project was to build a classification algorithm to diagnose breast cancer. She won the grand prize for building an algorithm whose accuracy was almost 99%.

Let’s see how well we can do with the ideas we’ve learned in this course.

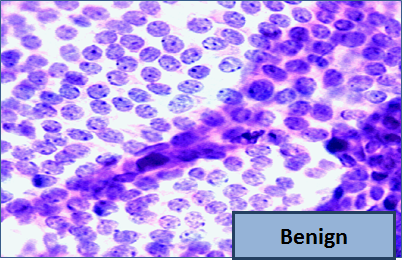

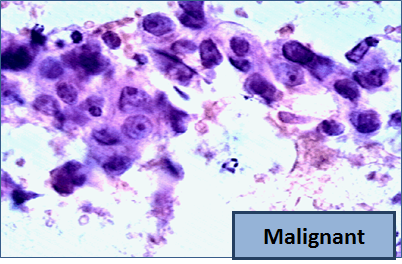

So, let me tell you a little bit about the data set. Basically, if a woman has a lump in her breast, doctors may want to take a biopsy to see whether it is cancerous. There are several different procedures for doing that. Brittany focused on fine needle aspiration (FNA), because it is less invasive than the alternatives. The doctor gets a sample of the mass, puts it under a microscope, and takes a picture, and a trained lab tech analyzes the picture to determine whether it is cancer or not. We get a picture like one of the following:

Unfortunately, distinguishing benign from malignant samples can be tricky. So, researchers have studied the use of machine learning to help with this task. The idea is that we’ll ask the lab tech to analyze the image and compute various attributes: things like the typical size of a cell, how much variation there is among the cell sizes, and so on. Then, we’ll try to use this information to predict (classify) whether the sample is malignant or not. We have a training set of past samples from women where the correct diagnosis is known, and we hope that our machine learning algorithm can use those samples to learn how to predict the diagnosis for future samples.

We end up with the following data set. For the “Class” column, 1 means malignant (cancer); 0 means benign (not cancer).

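```python
import pandas as pd

# path_data (the folder holding the data files) is assumed to be set earlier in the book
patients = pd.read_csv(path_data + 'breast-cancer.csv').drop(columns=['ID'])
patients.head(10)
```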

| | Clump Thickness | Uniformity of Cell Size | Uniformity of Cell Shape | Marginal Adhesion | Single Epithelial Cell Size | Bare Nuclei | Bland Chromatin | Normal Nucleoli | Mitoses | Class |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 5 | 1 | 1 | 1 | 2 | 1 | 3 | 1 | 1 | 0 |
| 1 | 5 | 4 | 4 | 5 | 7 | 10 | 3 | 2 | 1 | 0 |
| 2 | 3 | 1 | 1 | 1 | 2 | 2 | 3 | 1 | 1 | 0 |
| 3 | 6 | 8 | 8 | 1 | 3 | 4 | 3 | 7 | 1 | 0 |
| 4 | 4 | 1 | 1 | 3 | 2 | 1 | 3 | 1 | 1 | 0 |
| 5 | 8 | 10 | 10 | 8 | 7 | 10 | 9 | 7 | 1 | 1 |
| 6 | 1 | 1 | 1 | 1 | 2 | 10 | 3 | 1 | 1 | 0 |
| 7 | 2 | 1 | 2 | 1 | 2 | 1 | 3 | 1 | 1 | 0 |
| 8 | 2 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 5 | 0 |
| 9 | 4 | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 1 | 0 |

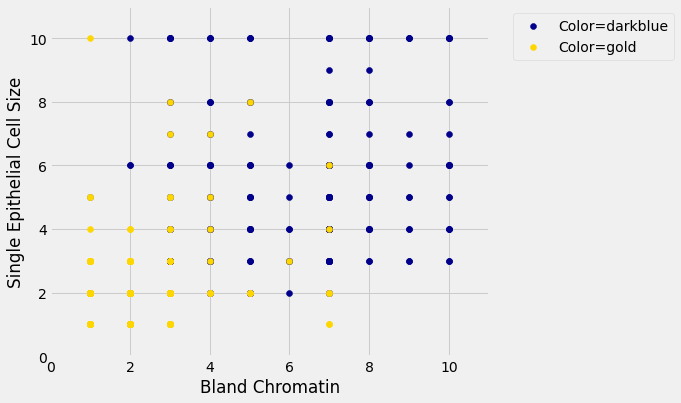

So we have 9 different attributes. I don’t know how to make a 9-dimensional scatterplot of all of them, so I’m going to pick two and plot them:

```python
# Map each class label to a plotting color: 1 (malignant) -> darkblue, 0 (benign) -> gold
color_table = pd.DataFrame(
    {'Class': np.array([1, 0]),
     'Color': np.array(['darkblue', 'gold'])}
)
patients_with_colors = pd.merge(patients, color_table, on='Class')
# Move the Class column to the front
patient_label = patients_with_colors.pop('Class')
patients_with_colors.insert(0, 'Class', patient_label)
patients_with_colors.head(3)
```

| | Class | Clump Thickness | Uniformity of Cell Size | Uniformity of Cell Shape | Marginal Adhesion | Single Epithelial Cell Size | Bare Nuclei | Bland Chromatin | Normal Nucleoli | Mitoses | Color |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 5 | 1 | 1 | 1 | 2 | 1 | 3 | 1 | 1 | gold |
| 1 | 0 | 5 | 4 | 4 | 5 | 7 | 10 | 3 | 2 | 1 | gold |
| 2 | 0 | 3 | 1 | 1 | 1 | 2 | 2 | 3 | 1 | 1 | gold |
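The `merge` followed by `pop` and `insert` works, but the same `Color` column can also be attached with `Series.map` and a dictionary, without a join. Here is a minimal sketch on a tiny made-up stand-in for `patients` (`patients_demo` and `class_to_color` are hypothetical names):

```python
import pandas as pd

# Tiny made-up stand-in for `patients`; only the Class column matters here
patients_demo = pd.DataFrame({'Clump Thickness': [5, 8, 1], 'Class': [0, 1, 0]})

# Look up each row's color directly from its Class label
class_to_color = {1: 'darkblue', 0: 'gold'}
colored = patients_demo.assign(Color=patients_demo['Class'].map(class_to_color))
print(colored['Color'].tolist())  # ['gold', 'darkblue', 'gold']
```

Because `map` works row by row on the existing table, the rows keep their original order and index labels.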

```python
import matplotlib.pyplot as plt

# Split the patients by color so each class gets its own scatter call and legend entry
pwc_darkblue = patients_with_colors[patients_with_colors['Color'] == 'darkblue']
pwc_gold = patients_with_colors[patients_with_colors['Color'] == 'gold']

fig, ax = plt.subplots(figsize=(7, 6))
ax.scatter(pwc_darkblue['Bland Chromatin'],
           pwc_darkblue['Single Epithelial Cell Size'],
           label='Color=darkblue',
           color='darkblue')
ax.scatter(pwc_gold['Bland Chromatin'],
           pwc_gold['Single Epithelial Cell Size'],
           label='Color=gold',
           color='gold')
plt.xlabel('Bland Chromatin')
plt.ylabel('Single Epithelial Cell Size')
ax.legend(bbox_to_anchor=(1.04, 1), loc="upper left")
plt.xlim(0, 11)
plt.ylim(0, 11)
plt.show()
```

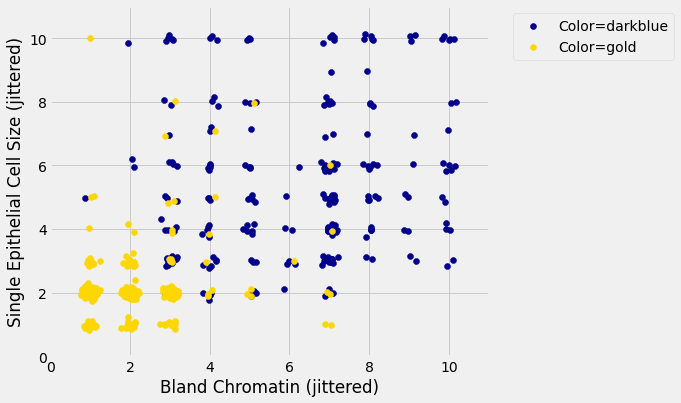

Oops. That plot is utterly misleading, because many points have identical values for both the x- and y-coordinates, so they are drawn on top of one another and look like a single point. To make it easier to see all the data points, I’m going to add a little bit of random jitter to the x- and y-values. Here’s how that looks:

| | Bland Chromatin (jittered) | Single Epithelial Cell Size (jittered) | Class | Color |
|---|---|---|---|---|
| 0 | 2.914102 | 2.032149 | 0 | gold |
| 1 | 2.871193 | 6.909818 | 0 | gold |
| 2 | 3.017759 | 2.176561 | 0 | gold |
| 3 | 2.971252 | 3.046495 | 0 | gold |
| 4 | 3.067122 | 1.790860 | 0 | gold |
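The cell that builds the jittered table `jwc` is not shown in this section. Here is one minimal way it could be done, with a hypothetical `jitter` helper. The noise model (normally distributed offsets with standard deviation 0.1) is an assumption; it is merely consistent with the values above, which stay within about 0.2 of the original integers. The demo uses the first five patients’ Bland Chromatin and Single Epithelial Cell Size values from the `patients` table; the real `jwc` also carries the `Class` and `Color` columns.

```python
import numpy as np
import pandas as pd

def jitter(df, columns, scale=0.1, seed=None):
    """Hypothetical helper: return a copy of df with a '<col> (jittered)' column
    for each col, equal to the original value plus normal noise (std = scale)."""
    rng = np.random.default_rng(seed)
    out = df.copy()
    for col in columns:
        noise = rng.normal(loc=0.0, scale=scale, size=len(df))
        out[col + ' (jittered)'] = df[col] + noise
    return out

# First five patients' values for the two plotted attributes
demo = pd.DataFrame({'Bland Chromatin': [3, 3, 3, 3, 3],
                     'Single Epithelial Cell Size': [2, 7, 2, 3, 2]})
jittered = jitter(demo, ['Bland Chromatin', 'Single Epithelial Cell Size'], seed=0)
print(jittered.round(2))
```

The jittered columns are only for plotting; the classifier should keep using the original integer values.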

```python
# jwc is the jittered table previewed above
jwc_darkblue = jwc[jwc['Color'] == 'darkblue']
jwc_gold = jwc[jwc['Color'] == 'gold']

fig, ax = plt.subplots(figsize=(7, 6))
ax.scatter(jwc_darkblue['Bland Chromatin (jittered)'],
           jwc_darkblue['Single Epithelial Cell Size (jittered)'],
           label='Color=darkblue',
           color='darkblue')
ax.scatter(jwc_gold['Bland Chromatin (jittered)'],
           jwc_gold['Single Epithelial Cell Size (jittered)'],
           label='Color=gold',
           color='gold')
plt.xlabel('Bland Chromatin (jittered)')
plt.ylabel('Single Epithelial Cell Size (jittered)')
ax.legend(bbox_to_anchor=(1.04, 1), loc="upper left")
plt.xlim(0, 11)
plt.ylim(0, 11)
plt.show()
```

For instance, you can see that there are lots of samples with chromatin = 2 and epithelial cell size = 2, and all of them are non-cancerous (gold).
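Overplotting can also be checked numerically: group the unjittered rows by their (x, y) pair and count them. Here is a minimal sketch with a hypothetical `count_pairs` helper, demonstrated on made-up rows; applied to the real `patients` table, it would show how many patients sit at each point and how many of them are malignant.

```python
import pandas as pd

def count_pairs(df, x, y, label='Class'):
    """For each distinct (x, y) pair, count the rows at that point and how many
    of them have label 1 (malignant); most crowded points first."""
    counts = (df.groupby([x, y])[label]
                .agg(n_patients='count', n_malignant='sum')
                .reset_index())
    return counts.sort_values('n_patients', ascending=False, ignore_index=True)

# Made-up rows, used only to illustrate; pass the real `patients` table instead
demo = pd.DataFrame({'Bland Chromatin': [2, 2, 2, 3, 7],
                     'Single Epithelial Cell Size': [2, 2, 2, 2, 6],
                     'Class': [0, 0, 0, 0, 1]})
print(count_pairs(demo, 'Bland Chromatin', 'Single Epithelial Cell Size'))
```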

Keep in mind that the jittering is just for visualization purposes, to make it easier to get a feeling for the data. Now we’re ready to build the classifier, and we’ll use the original (unjittered) data.

First we’ll create a training set and a test set. The data set has 683 patients, so we’ll randomly permute the data set and put 342 of them in the training set and the remaining 341 in the test set.

```python
# Shuffle all 683 patients, then split them 342 (training) / 341 (test)
shuffled_patients = patients.sample(683, replace=False)
training_set = shuffled_patients.take(np.arange(342))
test_set = shuffled_patients.take(np.arange(342, 683))
```

Let’s stick with 5 nearest neighbors, and see how well our classifier does.

```python
evaluate_accuracy(training_set, test_set, 5)
```

Over 96% accuracy. Not bad! Once again, pretty darn good for such a simple technique.

As a footnote, you might have noticed that Brittany Wenger did even better. What techniques did she use? One key innovation is that she incorporated a confidence score into her results: her algorithm had a way to determine when it was not able to make a confident prediction, and for those patients, it didn’t even try to predict their diagnosis. Her algorithm was 99% accurate on the patients where it made a prediction – so that extension seemed to help quite a bit.
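The source doesn’t describe how Brittany’s confidence score worked, so the following is not her method. It is a minimal sketch of the general idea of abstaining, applied to \(k\)-nearest neighbors: a hypothetical `classify_with_abstention` predicts only when at least `min_agreement` of the \(k\) nearest neighbors agree, and a hypothetical `accuracy_on_predicted` reports accuracy on the predicted cases together with the fraction of cases that got a prediction (coverage). The data are made up.

```python
import numpy as np

def classify_with_abstention(train_X, train_y, new_point, k, min_agreement):
    """Majority vote among the k nearest training points (Euclidean distance);
    returns None instead of a label when the winning class has fewer than
    min_agreement votes."""
    distances = np.sqrt(((train_X - new_point) ** 2).sum(axis=1))
    nearest = np.argsort(distances)[:k]
    labels, votes = np.unique(train_y[nearest], return_counts=True)
    best = np.argmax(votes)
    if votes[best] < min_agreement:
        return None  # abstain: the neighbors disagree too much
    return labels[best]

def accuracy_on_predicted(train_X, train_y, test_X, test_y, k, min_agreement):
    """Return (accuracy on the test points that got a prediction,
    fraction of test points that got a prediction)."""
    preds = [classify_with_abstention(train_X, train_y, p, k, min_agreement)
             for p in test_X]
    made = [i for i, p in enumerate(preds) if p is not None]
    if not made:
        return float('nan'), 0.0
    correct = sum(preds[i] == test_y[i] for i in made)
    return float(correct / len(made)), len(made) / len(test_X)

# Made-up 2-D data: class 0 near (0, 0), class 1 near (10, 10), a mixed pair near (5, 5)
train_X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10], [5, 5], [5, 6]])
train_y = np.array([0, 0, 0, 1, 1, 1, 0, 1])
test_X = np.array([[0.5, 0.5], [10.5, 10.5], [5, 5.5]])
test_y = np.array([0, 1, 1])

print(accuracy_on_predicted(train_X, train_y, test_X, test_y, k=5, min_agreement=1))  # (0.6666666666666666, 1.0)
print(accuracy_on_predicted(train_X, train_y, test_X, test_y, k=5, min_agreement=4))  # (1.0, 0.6666666666666666)
```

Raising `min_agreement` trades coverage for accuracy on the cases that do get a prediction, so an accuracy figure like 99% is only meaningful alongside the fraction of patients who received a prediction.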