2. Visualizing Numerical Distributions

Many of the variables that data scientists study are quantitative or numerical. Their values are numbers on which you can perform arithmetic. Examples that we have seen include the number of periods in chapters of a book, the amount of money made by movies, and the age of people in the United States.

The values of a categorical variable can be given numerical codes, but that doesn’t make the variable quantitative. In the example in which we studied Census data broken down by age group, the categorical variable SEX had the numerical codes 1 for ‘Male,’ 2 for ‘Female,’ and 0 for the aggregate of both groups 1 and 2. While 0, 1, and 2 are numbers, in this context it doesn’t make sense to subtract 1 from 2, or take the average of 0, 1, and 2, or perform other arithmetic on the three values. SEX is a categorical variable even though the values have been given a numerical code.

For our main example, we will return to a dataset that we studied when we were visualizing categorical data. It is the table top, which consists of data from U.S.A.’s top grossing movies of all time. For convenience, here is the description of the table again.

The first column contains the title of the movie. The second column contains the name of the studio that produced the movie. The third contains the domestic box office gross in dollars, and the fourth contains the gross amount that would have been earned from ticket sales at 2016 prices. The fifth contains the release year of the movie.

There are 200 movies on the list. Here are the first five and the last five rows of the table, which is sorted by the unadjusted gross receipts in the column Gross.

top = pd.read_csv(path_data + 'top_movies.csv')

top

| | Title | Studio | Gross | Gross (Adjusted) | Year |

|---|---|---|---|---|---|

| 0 | Star Wars: The Force Awakens | Buena Vista (Disney) | 906723418 | 906723400 | 2015 |

| 1 | Avatar | Fox | 760507625 | 846120800 | 2009 |

| 2 | Titanic | Paramount | 658672302 | 1178627900 | 1997 |

| 3 | Jurassic World | Universal | 652270625 | 687728000 | 2015 |

| 4 | Marvel's The Avengers | Buena Vista (Disney) | 623357910 | 668866600 | 2012 |

| ... | ... | ... | ... | ... | ... |

| 195 | The Caine Mutiny | Columbia | 21750000 | 386173500 | 1954 |

| 196 | The Bells of St. Mary's | RKO | 21333333 | 545882400 | 1945 |

| 197 | Duel in the Sun | Selz. | 20408163 | 443877500 | 1946 |

| 198 | Sergeant York | Warner Bros. | 16361885 | 418671800 | 1941 |

| 199 | The Four Horsemen of the Apocalypse | MPC | 9183673 | 399489800 | 1921 |

200 rows × 5 columns

# Make the numbers in the Gross and Gross (Adjusted) columns look nicer:

# Separate '000s with comma

# When using an original data set it is often good practice to work on a copy of the original

top1 = top.copy()

top1['Gross'] = top1['Gross'].apply('{:,}'.format)

top1['Gross (Adjusted)'] = top1['Gross (Adjusted)'].apply('{:,}'.format)

top1

| | Title | Studio | Gross | Gross (Adjusted) | Year |

|---|---|---|---|---|---|

| 0 | Star Wars: The Force Awakens | Buena Vista (Disney) | 906,723,418 | 906,723,400 | 2015 |

| 1 | Avatar | Fox | 760,507,625 | 846,120,800 | 2009 |

| 2 | Titanic | Paramount | 658,672,302 | 1,178,627,900 | 1997 |

| 3 | Jurassic World | Universal | 652,270,625 | 687,728,000 | 2015 |

| 4 | Marvel's The Avengers | Buena Vista (Disney) | 623,357,910 | 668,866,600 | 2012 |

| ... | ... | ... | ... | ... | ... |

| 195 | The Caine Mutiny | Columbia | 21,750,000 | 386,173,500 | 1954 |

| 196 | The Bells of St. Mary's | RKO | 21,333,333 | 545,882,400 | 1945 |

| 197 | Duel in the Sun | Selz. | 20,408,163 | 443,877,500 | 1946 |

| 198 | Sergeant York | Warner Bros. | 16,361,885 | 418,671,800 | 1941 |

| 199 | The Four Horsemen of the Apocalypse | MPC | 9,183,673 | 399,489,800 | 1921 |

200 rows × 5 columns

2.1. Visualizing the Distribution of the Adjusted Receipts

In this section we will draw graphs of the distribution of the numerical variable in the column Gross (Adjusted). For simplicity, let’s create a smaller table that has the information that we need. And since three-digit numbers are easier to work with than nine-digit numbers, let’s measure the Adjusted Gross receipts in millions of dollars. Note how round is used to retain only two decimal places.

millions = pd.DataFrame({'Title': top['Title'], 'Adjusted Gross': np.round((top['Gross (Adjusted)']/1e6), 2)})

millions

| | Title | Adjusted Gross |

|---|---|---|

| 0 | Star Wars: The Force Awakens | 906.72 |

| 1 | Avatar | 846.12 |

| 2 | Titanic | 1178.63 |

| 3 | Jurassic World | 687.73 |

| 4 | Marvel's The Avengers | 668.87 |

| ... | ... | ... |

| 195 | The Caine Mutiny | 386.17 |

| 196 | The Bells of St. Mary's | 545.88 |

| 197 | Duel in the Sun | 443.88 |

| 198 | Sergeant York | 418.67 |

| 199 | The Four Horsemen of the Apocalypse | 399.49 |

200 rows × 2 columns

2.1.1. A Histogram

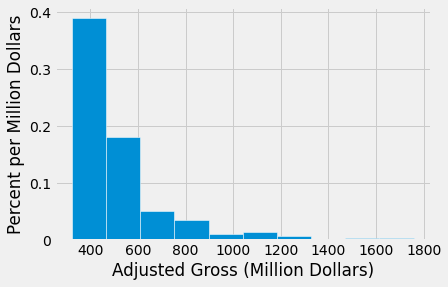

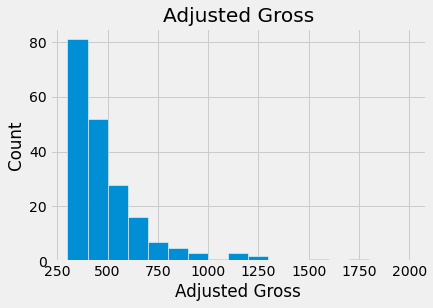

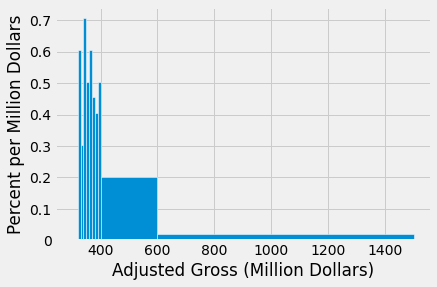

A histogram of a numerical dataset looks very much like a bar chart, though it has some important differences that we will examine in this section. First, let’s just draw a histogram of the adjusted receipts.

The hist method generates a histogram of the values in a column. The histogram shows the distribution of the adjusted gross amounts, in millions of 2016 dollars.

unit = 'Million Dollars'

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], 10, density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

2.1.2. The Horizontal Axis

The amounts have been grouped into contiguous intervals called bins. Although in this dataset no movie grossed an amount that is exactly on the edge between two bins, hist does have to account for situations where there might have been values at the edges. So hist has an endpoint convention: bins include the data at their left endpoint, but not the data at their right endpoint. The one exception is the last bin, which also includes its right endpoint.

We will use the notation [a, b) for the bin that starts at a and ends at b but doesn’t include b.

Sometimes, adjustments have to be made in the first or last bin, to ensure that the smallest and largest values of the variable are included. You saw an example of such an adjustment in the Census data studied earlier, where an age of “100” years actually meant “100 years old or older.”
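The endpoint convention can be checked on a tiny made-up array. The sketch below uses np.histogram, which applies the same binning rule as hist. The values and edges are hypothetical and were chosen so that several values sit exactly on bin edges.

```python
import numpy as np

# Hypothetical values chosen to sit exactly on the bin edges
values = np.array([300, 400, 400, 500])
edges = np.array([300, 400, 500])   # two bins: [300, 400) and [400, 500]

counts, _ = np.histogram(values, bins=edges)

# 300 falls in the first bin; both 400s fall in the second bin.
# The last bin is closed on the right, so 500 is counted there too.
print(counts)
```

The value 400 is counted in the bin that starts at 400, not in the bin that ends there.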

We can see that there are 10 bins (some bars are so low that they are hard to see), and that they all have the same width. We can also see that none of the movies grossed less than 300 million dollars; that is because we are considering only the top grossing movies of all time.

It is a little harder to see exactly where the ends of the bins are situated. For example, it is not easy to pinpoint exactly where the value 500 lies on the horizontal axis. So it is hard to judge exactly where one bar ends and the next begins.

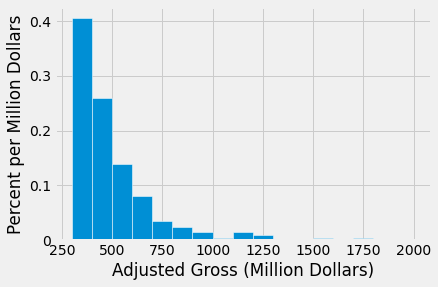

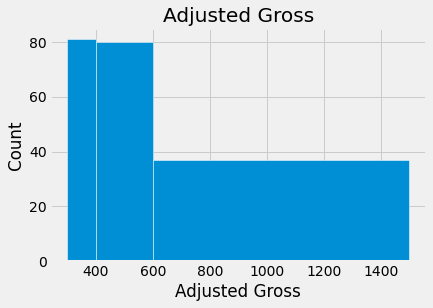

The optional argument bins can be used with hist to specify the endpoints of the bins. It must consist of a sequence of numbers that starts with the left end of the first bin and ends with the right end of the last bin. We will start by setting the numbers in bins to be 300, 400, 500, and so on, ending with 2000.
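As a quick check of how such a sequence of edges can be built, here is a small sketch using np.arange. The stop value is excluded from the result, which is why a stop of 2001 (any value above 2000 and at most 2100 would do) is needed to keep 2000 as the final edge.

```python
import numpy as np

# The stop value 2001 is excluded, so the last edge produced is 2000
edges = np.arange(300, 2001, 100)

print(edges[:3], edges[-1])   # first few edges and the final edge
print(len(edges) - 1)         # the number of bins is one less than the number of edges
```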

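Note that once the bin edges are specified, the bins are no longer the ones that hist chose by default, so the heights of the bars and the scale of the vertical axis change as well.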

unit = 'Million Dollars'

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], bins=np.arange(300,2001,100), density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

The horizontal axis of this figure is easier to read. The labels 200, 400, 600, and so on are centered at the corresponding values. The tallest bar is for movies that grossed between 300 million and 400 million dollars.

A very small number of movies grossed 800 million dollars or more. This results in the figure being “skewed to the right,” or, less formally, having “a long right-hand tail.” Distributions of variables like income or rent in large populations also often have this kind of shape.

2.1.3. The Counts in the Bins

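The counts of values in the bins can be computed from a column using the .value_counts() method, which takes an optional bins argument: either a number of bins or a sequence of bin edges. The result is a tabular form of a histogram. The index lists the bin ranges (but see the note about the final value, below), and the column contains the counts of all values in the Adjusted Gross column that are in the corresponding bin.

Two details of the output differ from the hist convention described above. First, the rows are sorted by count, largest first, rather than by bin. Second, value_counts closes each bin on the right: an interval such as (400.0, 500.0] contains the values that are greater than 400 and less than or equal to 500, and the first interval starts just below 300 so that 300 itself is included. Because no movie in this dataset has a value exactly on a bin edge, the counts are the same as they would be under the [a, b) convention.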

bin_counts = millions['Adjusted Gross']

bin_counts = pd.DataFrame(bin_counts.value_counts(bins=(np.arange(300,2001,100))))

bin_counts

| | Adjusted Gross |

|---|---|

| (299.999, 400.0] | 81 |

| (400.0, 500.0] | 52 |

| (500.0, 600.0] | 28 |

| (600.0, 700.0] | 16 |

| (700.0, 800.0] | 7 |

| (800.0, 900.0] | 5 |

| (1100.0, 1200.0] | 3 |

| (900.0, 1000.0] | 3 |

| (1200.0, 1300.0] | 2 |

| (1000.0, 1100.0] | 1 |

| (1500.0, 1600.0] | 1 |

| (1700.0, 1800.0] | 1 |

| (1300.0, 1400.0] | 0 |

| (1400.0, 1500.0] | 0 |

| (1600.0, 1700.0] | 0 |

| (1800.0, 1900.0] | 0 |

| (1900.0, 2000.0] | 0 |

bin_counts_norm = millions['Adjusted Gross']

bin_counts_norm = pd.DataFrame(bin_counts_norm.value_counts(normalize=True,bins=(np.arange(300,2001,100))))

bin_counts_norm

| | Adjusted Gross |

|---|---|

| (299.999, 400.0] | 0.405 |

| (400.0, 500.0] | 0.260 |

| (500.0, 600.0] | 0.140 |

| (600.0, 700.0] | 0.080 |

| (700.0, 800.0] | 0.035 |

| (800.0, 900.0] | 0.025 |

| (1100.0, 1200.0] | 0.015 |

| (900.0, 1000.0] | 0.015 |

| (1200.0, 1300.0] | 0.010 |

| (1000.0, 1100.0] | 0.005 |

| (1500.0, 1600.0] | 0.005 |

| (1700.0, 1800.0] | 0.005 |

| (1300.0, 1400.0] | 0.000 |

| (1400.0, 1500.0] | 0.000 |

| (1600.0, 1700.0] | 0.000 |

| (1800.0, 1900.0] | 0.000 |

| (1900.0, 2000.0] | 0.000 |
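The intervals printed above are closed on the right, whereas the [a, b) notation used in this section is closed on the left. The following sketch, on a handful of hypothetical values rather than the movie data, shows one way to get left-closed bins by calling pd.cut with right=False, and uses sort_index to list the bins in order rather than by count.

```python
import numpy as np
import pandas as pd

# Hypothetical values; 400 and 500 sit exactly on bin edges
values = pd.Series([300, 400, 400, 499, 500])
edges = np.array([300, 400, 500, 600])

# value_counts(bins=...) closes each bin on the right: (a, b]
right_closed = values.value_counts(bins=edges).sort_index()

# pd.cut with right=False closes each bin on the left instead: [a, b)
left_closed = pd.cut(values, bins=edges, right=False).value_counts().sort_index()

print(right_closed)
print(left_closed)
```

The two results differ only because some of the made-up values lie exactly on an edge. For the movie data, where no value does, either convention gives the same counts.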

2.1.3.1. Splitting data

In the next step we take the index of bin_counts_norm and place it in a column called intervals, which can then be split into its left and right endpoints.

interval_split = pd.DataFrame({'intervals': bin_counts_norm.index})

interval_split['left'] = interval_split['intervals'].array.left

interval_split['right'] = interval_split['intervals'].array.right

interval_split

| | intervals | left | right |

|---|---|---|---|

| 0 | (299.999, 400.0] | 299.999 | 400.0 |

| 1 | (400.0, 500.0] | 400.000 | 500.0 |

| 2 | (500.0, 600.0] | 500.000 | 600.0 |

| 3 | (600.0, 700.0] | 600.000 | 700.0 |

| 4 | (700.0, 800.0] | 700.000 | 800.0 |

| 5 | (800.0, 900.0] | 800.000 | 900.0 |

| 6 | (1100.0, 1200.0] | 1100.000 | 1200.0 |

| 7 | (900.0, 1000.0] | 900.000 | 1000.0 |

| 8 | (1200.0, 1300.0] | 1200.000 | 1300.0 |

| 9 | (1000.0, 1100.0] | 1000.000 | 1100.0 |

| 10 | (1500.0, 1600.0] | 1500.000 | 1600.0 |

| 11 | (1700.0, 1800.0] | 1700.000 | 1800.0 |

| 12 | (1300.0, 1400.0] | 1300.000 | 1400.0 |

| 13 | (1400.0, 1500.0] | 1400.000 | 1500.0 |

| 14 | (1600.0, 1700.0] | 1600.000 | 1700.0 |

| 15 | (1800.0, 1900.0] | 1800.000 | 1900.0 |

| 16 | (1900.0, 2000.0] | 1900.000 | 2000.0 |

lower_limit = np.round(interval_split['left'],0)

lower_limit = lower_limit.astype(int)

lower_limit = list(lower_limit)

lower_limit

[300,

400,

500,

600,

700,

800,

1100,

900,

1200,

1000,

1500,

1700,

1300,

1400,

1600,

1800,

1900]

Notice the value 2000 at the end of the (1900.0, 2000.0] interval. That’s not the left endpoint of any bar; it is the right endpoint of the last bar. No bin starts at 2000, so movies that made more than 2,000 million dollars would not have been counted at all. When either value_counts or hist is called with a bins argument, only the values that fall in the specified bins are considered.

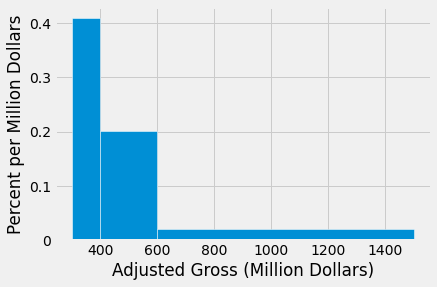

Once values have been binned, the resulting proportions can be used to generate a bar chart. The bars are positioned using lower_limit, which was converted to a list above.

# using matplotlib

width = lower_limit[1] - lower_limit[0]

# align='edge' places the left side of each bar at its bin's lower limit

plt.bar(lower_limit, bin_counts_norm['Adjusted Gross'], align='edge', width=width, ec='white')

plt.xlabel('Adjusted Gross (Million Dollars)')

plt.ylabel('Percent per Million Dollars')

plt.show()

2.1.4. The Vertical Axis: Density Scale

The horizontal axis of a histogram is straightforward to read, once we have taken care of details like the ends of the bins. The features of the vertical axis require a little more attention. We will go over them one by one.

Let’s start by examining how to calculate the numbers on the vertical axis. If the calculation seems a little strange, have patience – the rest of the section will explain the reasoning.

Calculation. The height of each bar is the percent of elements that fall into the corresponding bin, relative to the width of the bin.

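counts = bin_counts.rename(columns={'Adjusted Gross': 'Count'})

# Percent of the 200 movies that fall in each bin

percents = counts.copy()

percents['Percent'] = (counts['Count']/200)*100

percents

# Height: percent in the bin divided by the bin width (100 million dollars)

heights = percents.copy()

heights['Height'] = percents['Percent']/100

heights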

| | Count | Percent | Height |

|---|---|---|---|

| (299.999, 400.0] | 81 | 40.5 | 0.405 |

| (400.0, 500.0] | 52 | 26.0 | 0.260 |

| (500.0, 600.0] | 28 | 14.0 | 0.140 |

| (600.0, 700.0] | 16 | 8.0 | 0.080 |

| (700.0, 800.0] | 7 | 3.5 | 0.035 |

| (800.0, 900.0] | 5 | 2.5 | 0.025 |

| (1100.0, 1200.0] | 3 | 1.5 | 0.015 |

| (900.0, 1000.0] | 3 | 1.5 | 0.015 |

| (1200.0, 1300.0] | 2 | 1.0 | 0.010 |

| (1000.0, 1100.0] | 1 | 0.5 | 0.005 |

| (1500.0, 1600.0] | 1 | 0.5 | 0.005 |

| (1700.0, 1800.0] | 1 | 0.5 | 0.005 |

| (1300.0, 1400.0] | 0 | 0.0 | 0.000 |

| (1400.0, 1500.0] | 0 | 0.0 | 0.000 |

| (1600.0, 1700.0] | 0 | 0.0 | 0.000 |

| (1800.0, 1900.0] | 0 | 0.0 | 0.000 |

| (1900.0, 2000.0] | 0 | 0.0 | 0.000 |

Go over the numbers on the vertical axis of the histogram above to check that the column Height looks correct.

The calculations will become clear if we just examine the first row of the table.

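Remember that there are 200 movies in the dataset. The [300, 400) bin contains 81 movies. That’s 40.5% of all the movies:

\[ \mbox{Percent} = \frac{81}{200} \cdot 100 = 40.5 \]

The width of the [300, 400) bin is \( 400 - 300 = 100\). So

\[ \mbox{Height} = \frac{40.5}{100} = 0.405 \]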

The code for calculating the heights used the facts that there are 200 movies in all and that the width of each bin is 100.

Units. The height of the bar is 40.5% divided by 100 million dollars, and so the height is 0.405% per million dollars.

This method of drawing histograms creates a vertical axis that is said to be on the density scale. The height of a bar is not the percent of entries in the bin; it is the percent of entries in the bin relative to the amount of space in the bin. That is why the height measures crowdedness or density.
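The calculation for a single bar can be written as a small function. This is only a sketch: the name bar_height is introduced here for illustration and is not part of pandas or matplotlib.

```python
def bar_height(count, total, width):
    """Height on the density scale: percent of entries in the bin per unit of width."""
    percent = 100 * count / total
    return percent / width

# First bin of the histogram: 81 of the 200 movies, bin width 100 (million dollars)
height = bar_height(81, 200, 100)
print(height)   # percent per million dollars
```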

Let’s see why this matters.

2.1.5. Unequal Bins

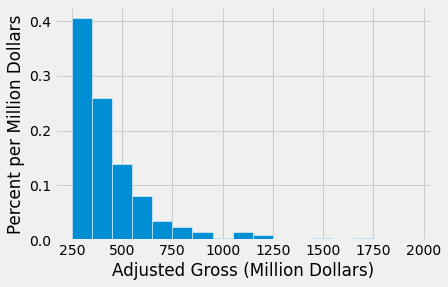

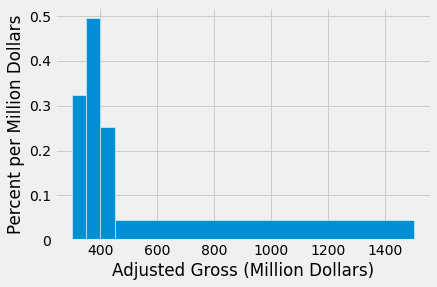

An advantage of the histogram over a bar chart is that a histogram can contain bins of unequal width. Below, the values in the Adjusted Gross column are binned into three uneven categories.

uneven = np.array([300, 400, 600, 1500])

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], bins=uneven, density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

Here are the counts in the three bins.

bin_counts_uneven = millions['Adjusted Gross']

bin_counts_uneven = pd.DataFrame(bin_counts_uneven.value_counts(bins=uneven))

bin_counts_uneven

| | Adjusted Gross |

|---|---|

| (299.999, 400.0] | 81 |

| (400.0, 600.0] | 80 |

| (600.0, 1500.0] | 37 |

Although the ranges [300, 400) and [400, 600) have nearly identical counts, the bar over the former is about twice as tall as the bar over the latter because it is only half as wide. The density of values in [300, 400) is about twice the density in [400, 600).

Histograms help us visualize where on the number line the data are most concentrated, especially when the bins are uneven.
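To make the comparison concrete, the heights of the three bars can be worked out from the counts listed above. The sketch below takes each percent out of all 200 movies, following the calculation used earlier in this section. A plotting routine that normalizes only over the values that land inside the bins (198 of them here) would produce slightly different numbers.

```python
import numpy as np

# Counts reported above for the bins [300, 400), [400, 600), [600, 1500)
counts = np.array([81, 80, 37])
edges = np.array([300, 400, 600, 1500])
total = 200                      # all movies in the table

widths = np.diff(edges)          # 100, 200, 900
percents = 100 * counts / total  # percent of all movies in each bin
heights = percents / widths      # percent per million dollars

for left, right, h in zip(edges[:-1], edges[1:], heights):
    print(f'[{left}, {right}): {h:.4f}% per million dollars')
```

The first height is 0.405 and the second is 0.2, which is where the “about twice as tall” comparison comes from.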

2.1.6. The Problem with Simply Plotting Counts

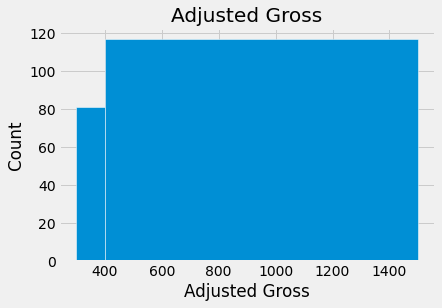

It is possible to display counts directly in a chart, using the density=False option of the hist method. The resulting chart has the same shape as a histogram when the bins all have equal widths, though the numbers on the vertical axis are different.

millions.hist('Adjusted Gross', density=False, bins=np.arange(300,2001,100), ec='white')

plt.xlabel('Adjusted Gross')

plt.ylabel('Count')

plt.show()

While the count scale is perhaps more natural to interpret than the density scale, the chart becomes highly misleading when bins have different widths. Below, it appears (due to the count scale) that high-grossing movies are quite common, when in fact we have seen that they are relatively rare.

millions.hist('Adjusted Gross', density=False, bins=uneven, ec='white')

plt.xlabel('Adjusted Gross')

plt.ylabel('Count')

plt.show()

Even though the method used is called hist, the figure above is NOT A HISTOGRAM. It misleadingly exaggerates the proportion of movies grossing at least 600 million dollars. The height of each bar is simply plotted at the number of movies in the bin, without accounting for the difference in the widths of the bins.

The picture becomes even more absurd if the last two bins are combined.

very_uneven = np.array([300, 400, 1500])

millions.hist('Adjusted Gross', density=False, bins=very_uneven, ec='white')

plt.xlabel('Adjusted Gross')

plt.ylabel('Count')

plt.show()

In this count-based figure, the shape of the distribution of movies is lost entirely.
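The difference between the two scales can also be seen numerically. The following sketch uses np.histogram on a few hypothetical amounts (not taken from the movies table) and compares the raw counts with the areas obtained on the density scale.

```python
import numpy as np

# Hypothetical amounts in millions of dollars, not taken from the movies table
values = np.array([310, 320, 330, 340, 450, 550, 900, 1400])
edges = np.array([300, 400, 600, 1500])

counts, _ = np.histogram(values, bins=edges)
density, _ = np.histogram(values, bins=edges, density=True)

widths = np.diff(edges)
print(counts)            # raw counts ignore how wide each bin is
print(density * widths)  # areas: the proportion of values in each bin
```

On the count scale the last two bars would be equally tall, even though the last bin is 4.5 times as wide as the middle one. On the density scale it is the areas, not the heights, that match the proportions.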

2.1.7. The Histogram: General Principles and Calculation

The figure above shows that what the eye perceives as “big” is area, not just height. This observation becomes particularly important when the bins have different widths.

That is why a histogram has two defining properties:

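1. The bins are drawn to scale and are contiguous (though some might be empty), because the values on the horizontal axis are numerical.

2. The area of each bar is proportional to the number of entries in the bin.

Property 2 is the key to drawing a histogram, and is usually achieved as follows:

\[ \mbox{area of bar} = \mbox{percent of entries in bin} \]

The calculation of the heights just uses the fact that the bar is a rectangle:

\[ \mbox{area of bar} = \mbox{height of bar} \times \mbox{width of bin} \]

and so

\[ \mbox{height of bar} = \frac{\mbox{area of bar}}{\mbox{width of bin}} = \frac{\mbox{percent of entries in bin}}{\mbox{width of bin}} \]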

The units of height are “percent per unit on the horizontal axis.”

When drawn using this method, the histogram is said to be drawn on the density scale. On this scale:

The area of each bar is equal to the percent of data values that are in the corresponding bin.

The total area of all the bars in the histogram is 100%. Speaking in terms of proportions, we say that the areas of all the bars in a histogram “sum to 1”.
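The claim about the total area can be checked against the counts computed earlier for the 17 bins of width 100. This is a sketch that retypes those counts by hand, in bin order, rather than reading them from the table.

```python
import numpy as np

# Counts for the 17 bins of width 100 from 300 to 2000, listed in bin order
counts = np.array([81, 52, 28, 16, 7, 5, 3, 1, 3, 2, 0, 0, 1, 0, 1, 0, 0])
widths = np.full(len(counts), 100)

heights = 100 * counts / counts.sum() / widths   # percent per million dollars
areas = heights * widths                         # percent of movies in each bin

print(areas.sum())
```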

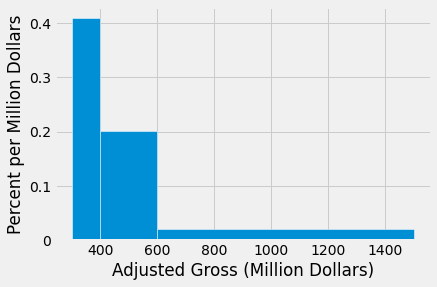

2.1.8. Flat Tops and the Level of Detail

Even though the density scale correctly represents percents using area, some detail is lost by grouping values into bins.

Take another look at the [300, 400) bin in the figure below. The flat top of the bar, at the level 0.405% per million dollars, hides the fact that the movies are somewhat unevenly distributed across that bin.

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], bins=uneven, density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

To see this, let us split the [300, 400) bin into 10 narrower bins, each of width 10 million dollars.

some_tiny_bins = np.array([300, 310, 320, 330, 340, 350, 360, 370, 380, 390, 400, 600, 1500])

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], bins=some_tiny_bins, density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

Some of the skinny bars are taller than 0.405 and others are shorter; the first two have heights of 0 because there are no data between 300 and 320. By putting a flat top at the level 0.405 across the whole bin, we are deciding to ignore the finer detail and are using the flat level as a rough approximation. Often, though not always, this is sufficient for understanding the general shape of the distribution.

The height as a rough approximation. This observation gives us a different way of thinking about the height. Look again at the [300, 400) bin in the earlier histograms. As we have seen, the bin is 100 million dollars wide and contains 40.5% of the data. Therefore the height of the corresponding bar is 0.405% per million dollars.

Now think of the bin as consisting of 100 narrow bins that are each 1 million dollars wide. The bar’s height of “0.405% per million dollars” means that as a rough approximation, 0.405% of the movies are in each of those 100 skinny bins of width 1 million dollars.
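The same reasoning gives a rough estimate for any narrower interval inside the bin. Below is a minimal sketch; the helper approx_percent is a name made up for this illustration, and the result is only the flat-top approximation, not an exact count.

```python
height = 0.405          # percent per million dollars over the [300, 400) bin
total_movies = 200

def approx_percent(height, width):
    """Approximate percent of entries in a sub-interval of the given width."""
    return height * width

percent_in_10 = approx_percent(height, 10)        # a sub-bin 10 million dollars wide
movies_in_10 = percent_in_10 / 100 * total_movies

print(percent_in_10, movies_in_10)
```

Under the flat-top approximation, each sub-bin of width 10 million dollars would hold about 4 percent of the movies, or about 8 movies. The finer histogram above shows how far the actual sub-bins depart from that.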

Notice that because we have the entire dataset that is being used to draw the histograms, we can draw the histograms to as fine a level of detail as the data and our patience will allow. However, if you are looking at a histogram in a book or on a website, and you don’t have access to the underlying dataset, then it becomes important to have a clear understanding of the “rough approximation” created by the flat tops.

2.1.9. Histograms Q&A

Let’s draw the histogram again, this time with four bins, and check our understanding of the concepts.

uneven_again = np.array([300, 350, 400, 450, 1500])

fig, ax1 = plt.subplots()

ax1.hist(millions['Adjusted Gross'], bins=uneven_again, density=True, ec='white')

y_vals = ax1.get_yticks()

y_label = 'Percent per ' + (unit if unit else 'unit')

x_label = 'Adjusted Gross (' + (unit if unit else 'unit') + ')'

ax1.set_yticklabels(['{:g}'.format(x * 100) for x in y_vals])

plt.ylabel(y_label)

plt.xlabel(x_label)

plt.show()

bin_counts_uneven_again = millions['Adjusted Gross']

bin_counts_uneven_again = pd.DataFrame(bin_counts_uneven_again.value_counts(bins=uneven_again))

bin_counts_uneven_again

| | Adjusted Gross |

|---|---|

| (450.0, 1500.0] | 92 |

| (350.0, 400.0] | 49 |

| (299.999, 350.0] | 32 |

| (400.0, 450.0] | 25 |

Look again at the histogram, and compare the [400, 450) bin with the [450, 1500) bin.

Q: Which has more movies in it?

A: The [450, 1500) bin. It has 92 movies, compared with 25 movies in the [400, 450) bin.

Q: Then why is the [450, 1500) bar so much shorter than the [400, 450) bar?

A: Because height represents density per unit of space in the bin, not the number of movies in the bin. The [450, 1500) bin does have more movies than the [400, 450) bin, but it is also a whole lot wider. So it is less crowded. The density of movies in it is much lower.
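The answer can be backed up with a short calculation from the counts in the table above. As before, this sketch takes each percent out of all 200 movies, so the heights drawn by a routine that normalizes over only the binned values may differ slightly.

```python
total = 200  # all movies in the table

# (count, left edge, right edge) for the two bins being compared
bins = {'[400, 450)': (25, 400, 450), '[450, 1500)': (92, 450, 1500)}

heights = {}
for label, (count, left, right) in bins.items():
    heights[label] = (100 * count / total) / (right - left)
    print(label, round(heights[label], 4), 'percent per million dollars')
```

The narrow bin comes out at 0.25 percent per million dollars and the wide bin at roughly 0.044, so the bar over [400, 450) is more than five times as tall even though it holds far fewer movies.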

2.1.10. Differences Between Bar Charts and Histograms

Bar charts display one quantity per category. They are often used to display the distributions of categorical variables. Histograms display the distributions of quantitative variables.

All the bars in a bar chart have the same width, and there is an equal amount of space between consecutive bars. The bars of a histogram can have different widths, and they are contiguous.

The lengths (or heights, if the bars are drawn vertically) of the bars in a bar chart are proportional to the value for each category. The heights of bars in a histogram measure densities; the areas of bars in a histogram are proportional to the numbers of entries in the bins.